Bayesian inference focuses on estimation of model parameters. But what if we want to estimate a future outcome value? This is one of the goals of Bayesian predictions.

Bayesian predictions are outcome values simulated from the posterior predictive distribution, which is the distribution of the unobserved (future) data given the observed data. They can be used as optimal predictors in forecasting, optimal classifiers in classification problems, imputations for missing data, and more. They are also important for checking model goodness of fit.

The new bayespredict command computes Bayesian predictions. Also new are bayesreps for computing Markov chain Monte Carlo (MCMC) replicates of outcome variables and bayesstats ppvalues for computing posterior predictive p-values, all of which are based on Bayesian predictions and used for model diagnostic checks.

First, you fit a model. Here is a Bayesian linear regression fit using bayesmh:

. bayesmh y x1 x2, likelihood(normal({var}))

prior({y:}, normal(0,100)) prior({var}, igamma(1,2))

To compute Bayesian predictions for outcome y and save them in ysimdata.dta, type

. bayespredict {_ysim}, saving(ysimdata)

These simulated values can be used to perform model diagnostic checks or to make forecasts if you change the values recorded in x1 and x2 or add new values for them.

Bayesian prediction differs from frequentist prediction. Prediction, in a frequentist sense, is a deterministic function of estimated model parameters. For example, in a linear regression, the linear predictor, which is a linear combination of estimated regression coefficients and observed covariates, is used to predict values of continuous outcomes. Bayesian predictions, on the other hand, are simulated outcomes (or functions of them) and are thus stochastic quantities. (Remember to specify a random-number seed for reproducibility when computing Bayesian predictions!)

Unlike classical prediction, which produces a single value for each observation, Bayesian prediction produces an MCMC sample of values for each observation. If you have n observations and your MCMC sample size is T, the result of Bayesian prediction is a T × n dataset. Thus, bayespredict saves Bayesian predictions in a separate file.

Instead of an entire MCMC sample of outcome values, you can use bayesreps to obtain a random subset of MCMC replicates and save them as new variables in your current dataset. These are useful, for instance, for quick visual comparison of the simulated data with the observed data.

. bayesreps yrep*, nreps(10)

You may also be interested in various summaries of MCMC outcome values such as posterior means or medians. You may compute those (and more) and save them as new variables in your current dataset. For instance,

. bayespredict pmean, mean

computes the posterior mean of y across MCMC values for each observation and stores the results in the new variable pmean.

Finally, you can compute functions of Bayesian predictions such as test statistics and test quantities (test statistics that also depend on parameters). These are also useful for model checks, and we can use bayesstats ppvalues to compute posterior predictive p-values for them such as for the minimum and maximum statistics below:

. bayesstats ppvalues (ymin: @min({_ysim})) (ymax: @max({_ysim})) using ysimdata

The above commands are available after Bayesian models fit using bayesmh.

There are more features, and we demonstrate them in the examples below.

Let's fit the CES production function from Example 1 of [R] nl using bayesmh. This function models the log of output from firms as a nonlinear function of their capital and labor usage.

. webuse production

. bayesmh lnoutput = ({b0}-1/{rho=1}*ln({delta=0.5}*capital^(-1*{rho})+(1-{delta})*labor^(-1*{rho}))),

likelihood(normal({sig2}))

prior({sig2}, igamma(0.01, 0.01))

prior({b0}, normal(0, 100))

prior({delta}, uniform(0, 1))

prior({rho}, gamma(1, 1))

block({b0 delta rho sig2}, split)

rseed(16) saving(ces_mcmc)

Burn-in ...

Simulation ...

Model summary

| Equal-tailed | ||||

| Mean Std. Dev. MCSE Median [95% Cred. Interval] | ||||

| b0 | 3.829453 .1047645 .0059 3.829384 3.633927 4.027828 | |||

| delta | .4830306 .0636595 .001283 .4820599 .3540333 .6146103 | |||

| rho | 1.838675 .8665057 .057343 1.624436 .8088482 4.053971 | |||

| sig2 | .3098183 .043935 .001009 .3062381 .233141 .4054633 | |||

The nonlinear specification of bayesmh is similar to that of nl. But in our Bayesian model, we additionally specify prior distributions for model parameters. We use mildly informative priors for the parameters. To improve sampling efficiency, we simulate parameters in separate blocks. We also save the MCMC results in ces_mcmc.dta—this step is required before using bayespredict.

Suppose that we would like to compute the value of log output given the capital and labor values of 1.5 and 2, respectively. First, we add a new observation that will record the new values for capital and labor.

. set obs 101 number of observations (_N) was 100, now 101 . replace capital = 1.5 in 101 (1 real change made) . replace labor = 2 in 101 (1 real change made)

Next, we use bayespredict with the mean option to compute the posterior mean estimate for each observation in the dataset and save them in the new variable pmean. We also specify a random-number seed for reproducibility.

. bayespredict pmean, mean rseed(16) Computing predictions ...

We list the last 11 observations to compare the observed and predicted values of lnoutput.

. list labor capital lnoutput pmean in 90/101

| labor capital lnoutput pmean | |

| 90. | .0667528 .8334751 1.360945 1.525867 |

| 91. | 1.660983 .2120817 2.772991 2.700305 |

| 92. | 1.761712 5.48467 4.748453 4.717113 |

| 93. | .3323506 .2172508 1.994157 2.489211 |

| 94. | .2826679 .989325 3.231169 2.891441 |

| 95. | 1.330708 1.475331 4.571402 4.161445 |

| 96. | .4023916 .2614668 2.737468 2.667645 |

| 97. | 3.801785 .6329353 3.619768 3.786168 |

| 98. | .3353206 .2557932 2.292713 2.584241 |

| 99. | 31.5595 2.72437 5.026271 5.275726 |

| 100. | 3.80443 .946865 3.363704 4.150184 |

| 101. | 2 1.5 . 4.358272 |

The predicted posterior means are similar to the observed values of lnoutput in the existing observations. The predicted value of log of output in the new observation is 4.36.

If desired, we can compute credible intervals to form inference about our predicted value.

. bayespredict cril criu, cri rseed(16) Computing predictions ... . list pmean cril criu in 101

| pmean cril criu | |

| 101. | 4.358272 3.257501 5.497338 |

The 95% credible interval for the new observation is [3.26, 5.50].

We now drop the new observation and proceed with further analysis.

. drop in 101 (1 observation deleted)

In addition to predicting new outcome values, Bayesian predictions are useful for model checking. These checks, also known as posterior predictive checks, amount to comparing the observed data with the so-called replicated data. Replicated data are data simulated from the fitted model or, more precisely, from the posterior predictive distribution using the same covariate values as were used for estimation. The comparisons may be done graphically using, for instance, histograms or formally using posterior predictive p-values.

Let's compare the distributions of the observed and replicated data. The full replicated data will contain 10,000 MCMC replicates for lnoutput. Graphical comparison of so many replicates is not feasible. Instead, we can randomly generate a smaller subset of MCMC replicates and evaluate those. Here we will create only three replicates for simplicity.

. bayesreps yrep*, nreps(3) rseed(16) Computing predictions ... . list lnoutput yrep1 yrep2 yrep3 pmean in 1/10

| lnoutput yrep1 yrep2 yrep3 pmean | |

| 1. | 2.933451 3.496267 1.416066 3.85218 3.111261 |

| 2. | 4.613716 4.794032 3.46249 4.354308 4.484845 |

| 3. | 1.654005 2.067956 2.135827 1.949141 2.026532 |

| 4. | 2.025361 2.568239 2.233599 2.780148 2.224722 |

| 5. | 3.165065 2.979953 2.179758 3.609532 2.894845 |

| 6. | 1.372219 1.58399 2.10996 2.931538 2.345947 |

| 7. | 2.92082 4.086907 3.160853 3.570297 3.255964 |

| 8. | 2.698994 1.730741 1.845746 2.216033 2.281841 |

| 9. | 1.197918 1.61528 1.039314 1.529847 1.224741 |

| 10. | 3.097216 2.280563 2.774098 2.799236 2.76704 |

bayesreps generates three new variables: yrep1, yrep2, and yrep3, which contain MCMC replicates. pmean is essentially the sample mean of all 10,000 MCMC replicates.

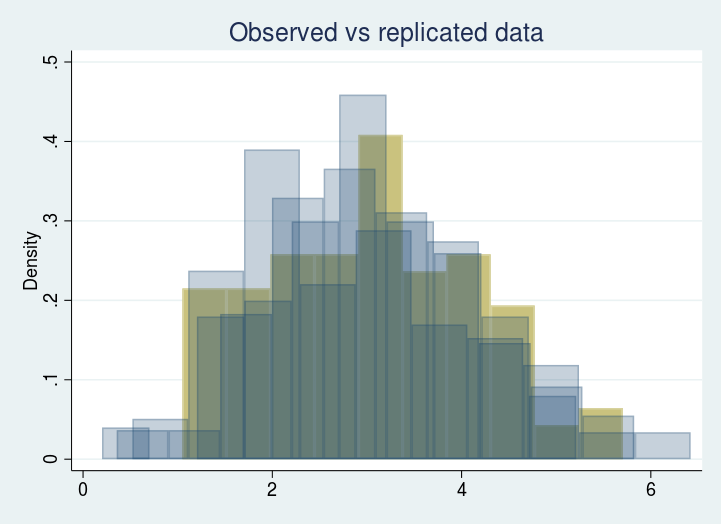

We can compare the histograms of the replicated data (in blue) with those of the observed data.

. twoway histogram lnoutput || histogram yrep1, color(navy%25) || histogram yrep2, color(navy%25) || histogram yrep3, color(navy%25) legend(off) title(Observed vs replicated data)

The distributions appear to be similar.

If needed, we can also simulate the entire 10,000 × 100 sample of predicted values. We save it in a separate dataset, ces_pred.dta.

. bayespredict {_ysim}, saving(ces_pred) rseed(16)

Computing predictions ...

file ces_pred.dta saved

file ces_pred.ster saved

. describe _index _ysim1_1 _ysim1_2 _ysim1_3 using ces_pred

storage display value

variable name type format label variable label

The dataset contains 10,000 observations and 100 variables, one for each observation from the original dataset. Above, we described only the first three out of 100 variables.

We can use bayesstats summary to compute posterior summaries for, say, the first 10 observations.

. bayesstats summary {_ysim[1/10]} using ces_pred

Posterior summary statistics MCMC sample size = 10,000

| Equal-tailed | |||

| Mean Std. Dev. MCSE Median [95% Cred. Interval] | |||

| _ysim1_1 | 3.111305 .564188 .005642 3.114486 2.013625 4.230432 | ||

| _ysim1_2 | 4.47848 .5647274 .006264 4.487651 3.362394 5.576457 | ||

| _ysim1_3 | 2.033675 .5562368 .005692 2.033444 .9360682 3.115028 | ||

| _ysim1_4 | 2.234464 .5692011 .005783 2.234235 1.130026 3.361621 | ||

| _ysim1_5 | 2.894212 .5655813 .005948 2.894129 1.789959 4.013839 | ||

| _ysim1_6 | 2.337328 .5614379 .00588 2.33384 1.226629 3.451852 | ||

| _ysim1_7 | 3.25311 .5660545 .005661 3.252063 2.126941 4.372151 | ||

| _ysim1_8 | 2.274426 .5638561 .005639 2.280058 1.157936 3.359114 | ||

| _ysim1_9 | 1.227687 .5582967 .005649 1.23153 .1239763 2.312214 | ||

| _ysim1_10 | 2.766746 .5644516 .005759 2.76776 1.655075 3.871556 | ||

The posterior mean estimates are similar to the ones we computed earlier and stored in variable pmean.

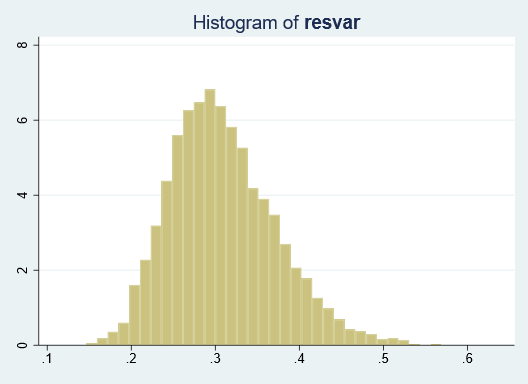

bayespredict also provides access to residuals. Below, we use bayesgraph histogram to produce a histogram of the variance of the residuals based on the MCMC sample. We use Mata function variance() to compute the variance of the residuals {_resid} for each MCMC replicate and label the results as resvar.

. bayesgraph histogram (resvar:@variance({_resid})) using ces_pred

The distribution has the mean of roughly 0.3, which is similar to the posterior mean estimate of the parameter {sig2}. And its shape resembles a chi-squared distribution.

We can compare the observed and replicated data more formally. We can use bayesstats ppvalues to compute posterior predictive p-values for various distributional summaries. For instance, we can compare the means, minimums, and maximums of the observed and replicated data.

One of the neat prediction features of Stata's Bayesian suite is the ability to specify functions of simulated values with any Bayesian postestimation command, including bayesstats ppvalues. We have already seen an example of this when we used bayesgraph histogram to plot the histogram for the variance of residuals. Here, we will use Mata functions mean(), min(), and max() to compute posterior predictive p-values for the corresponding summaries of the simulated outcomes.

. bayesstats ppvalues (mean:@mean({_ysim})) (min:@min({_ysim})) (max:@max({_ysim})) using ces_pred

Posterior predictive summary MCMC sample size = 10,000

| T | Mean Std. Dev. E(T_obs) P(T>=T_obs) | ||

| mean | 3.045143 .0787588 3.044554 .5026 | ||

| min | .5130189 .3401942 1.049675 .0365 | ||

| max | 5.84806 .3703789 5.703145 .626 | ||

Posterior predictive p-value, labeled as P(T>=T_obs) in the output, is the probability that the test statistic (such as, for instance, the minimum in our example) in the replicated data, T, is as or more extreme as the same statistic in the observed data, T_obs. When this probability is close to 0.5, the replicated and observed data are considered to be in agreement with respect to the test statistic of interest.

In our example, the mean and maximum statistics appear to be in agreement in the observed and replicated data, but not the minimum statistic.

Yet another neat feature of Bayesian predictions is that you can create your own functions and apply them to simulated values. You can write these functions as Mata functions, Stata programs, or both.

Let's write a Mata function to compute the skewness of a variable.

. mata:

| mata (type end to exit) |

And let's use a Stata program to compute a kurtosis of a variable.

. program kurt 1. version 16 2. args kurtosis x 3. quietly summarize `x', detail 4. scalar `kurtosis' = r(kurtosis) 5. end

Now, we can use these functions with bayespredict to compute skewness and kurtosis of simulated outcome values and save the results in a new dataset ces_teststat.dta.

. bayespredict (skewness:@skew({_ysim})) (kurtosis:@kurt {_ysim}), saving(ces_teststat) rseed(16)

Computing predictions ...

file ces_teststat.dta saved

file ces_teststat.ster saved

We named our predicted functions as skewness and kurtosis. We can use these names to refer to the functions from other postestimation commands.

One advantage of specifying functions directly with bayespredict instead of within other Bayesian postestimation commands is that we avoid creating a large dataset and save time by not recomputing the results each time we run another command. The disadvantage is that we will not be able to compute any other functions that require access to the full simulated dataset.

We use bayesstats summary to compute posterior summaries of the skewness and kurtosis measures.

. bayesstats summary {skewness} {kurtosis} using ces_teststat

Posterior summary statistics MCMC sample size = 10,000

| Equal-tailed | |||

| Mean Std. Dev. MCSE Median [95% Cred. Interval] | |||

| skewness | .1471358 .1660461 .00166 .1473484 -.1803233 .4741272 | ||

| kurtosis | 2.670391 .3049096 .003386 2.636845 2.163721 3.353066 | ||

And we can compute posterior predictive p-values for these measures too.

. bayesstats ppvalues {skewness} {kurtosis} using ces_teststat

Posterior predictive summary MCMC sample size = 10,000

| T | Mean Std. Dev. E(T_obs) P(T>=T_obs) | ||

| skewness | .1471358 .1660461 .1555046 .4806 | ||

| kurtosis | 2.670391 .3049096 2.252134 .9411 | ||

The replicated data fit the observed data well with respect to skewness but not kurtosis.

See New in Bayesian analysis for other new features in Bayesian analysis. Also see all Bayesian features.

Learn about Bayesian lasso modeling and How to run multiple chains in parallel.

Read more about Bayesian predictions and Bayesian analysis in the Stata Bayesian Analysis Reference Manual.